Learn to program in 10 minutes!

(More realistically the rest of your life)

- Programming is hard

- Be actively lazy

- Don't code, glue

- It begins and ends with the command line

- Use version control

Programming 101

- Always start with working code

- Never ignore misbehaving code

- How do you eat an elephant?

- Just google it!

- For the love of god, use version control

The sweet spot

Taco bell programming

- Do not overthink things

- Use pre-existing basic tools

- Functionality is an asset but code is a liability

The task

- Write a webscraper that pulls down a million webpages

- Search all pages for references of a given phrase

- Parallelise the code to run on a 32 core machine

The solution

- EC2 elastic compute cloud?

- Hadoop nosql database?

- SQS ZeroMQ?

- Parallelising using openMPI or mapreduce?

The ingredients

- cat

- wget

- grep

- xargs

Download a million webpages

cat webpages.txt | xargs wget

Search for references to a given phrase...

grep -l reddit *.*

...and if found then do further processing

grep -l reddit *.* | xargs -n1 ./process.sh

parallelise to run on 32 cores

grep -l reddit *.* | xargs -n1 -P32 ./process.sh

Turning it into a program

#!/bin/bash

cat webpages.txt | xargs wget -P pages

grep -l reddit pages/*.* | xargs -n1 -P32 ./process.sh

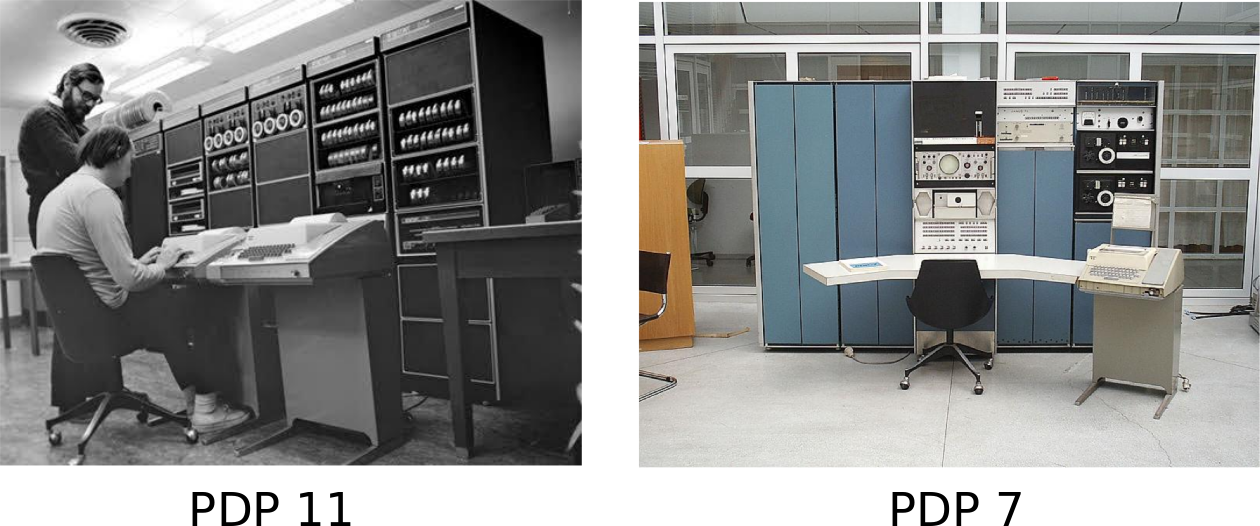

Unix

It began with a game

Why learn unix?

The unix philosophy

- Write programs that do one thing and do it well

- Write programs to work together

- Write programs to handle text

- Keep it simple stupid

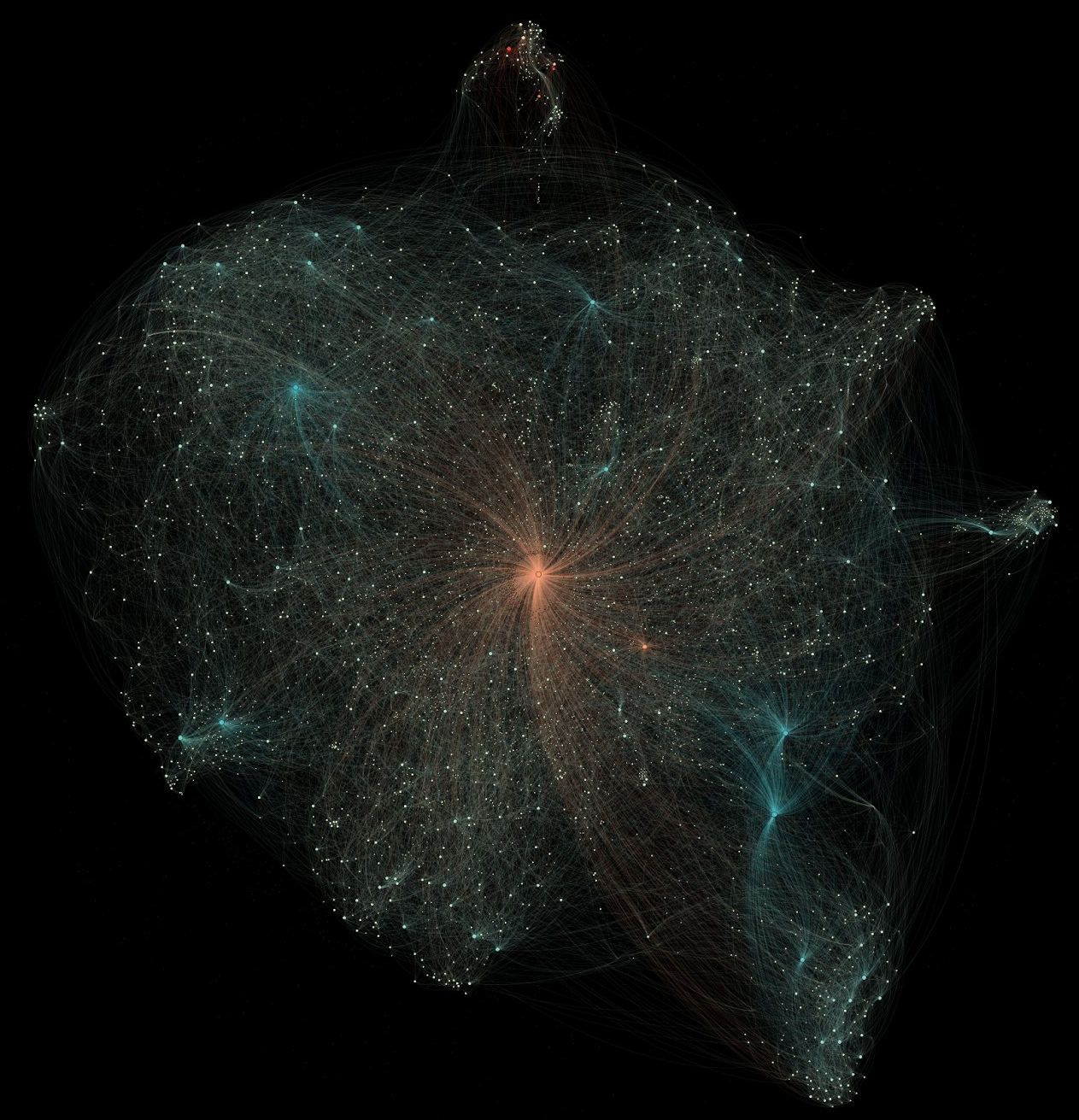

The open source universe

Version Control

But I don't need it!

- Because you in the past hates you in the now

- final2014revisedb2bbb.zip

- No, dropbox will not do

- Software: Subversion / GIT / Mercurial

- Websites: Sourceforge / Github / Bitbucket

- Your employer will be looking at your profile

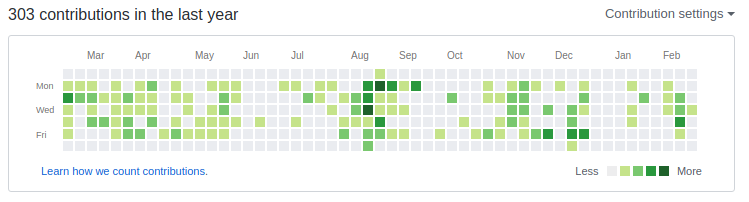

Github

Look at it grow

link

How to program

- Write small programs

- Glue together existing code

- Use version control

- If your code is slow, profile it

- Test your code

- Know at least 1 command line editor: VI, Emacs, nano

- Do not ignore misbehaving code

- Every time you use your mouse you have failed as a programmer